Hortonworks Sandbox Ssh Key Generator

- Free Online Private and Public Key Generator: generate a private and public key online for SSH, PuTTY, GitHub, and Bitbucket. Save both keys on your computer (text file, Dropbox, Evernote, etc.). The generated keys are random and cannot be restored.

- Make an entry in the Windows hosts file that maps the sandbox IP address to a name, for example 192.168.183.141 hdpsandbox. Then open your browser and go to hdpsandbox:8888. (BruceWayne, Jan 1 '16 at 8:53) Reply: Nope, that didn't work either.

- Using Hortonworks Virtual Sandbox 6 Step 1-b: Select the Hortonworks Virtual Sandbox AMI. Select the ‘Community AMIs’ tab. In the “Viewing:” pull-down menu, make sure it is set to either “All Images” or “Public Images”. Using the Search input box, enter “hortonworks” and press the return key.

- Notes on installing Hortonworks Hadoop Sandbox - I - hadoop-sandbox.md.

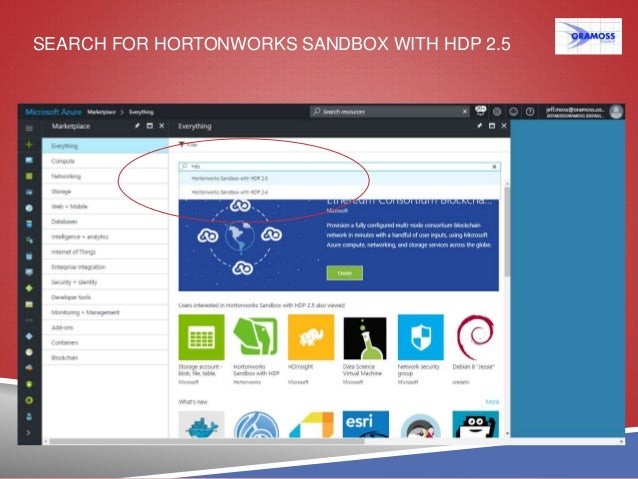

- Products Hortonworks Data Platform (HDP) Sandbox. For a step-by-step guide on how to deploy the Hortonworks Sandbox on Azure, visit: Deploying Hortonworks Sandbox on Microsoft Azure. Already Set Up and Looking to Learn? There are a series of tutorials to get you going with HDP fast.

- Ssh Key Github

- Ssh Key Setup

- Hortonworks Sandbox Setup

- Hortonworks Sandbox Password

- Hortonworks Sandbox Ssh

To enter the BIOS, press F10 while your machine starts (that is the key on my HP laptop; check which key applies to your machine). Download Hortonworks Sandbox. Step 2: Download the Hortonworks Sandbox for Windows from the location below. Jun 07, 2017: Step-by-step instructions on how to install a single-node Hadoop cluster on Ubuntu 14.04 using Ambari. Hortonworks also provides sandbox images for VirtualBox and VMware. Generate SSH keys: go to the .ssh folder and generate the SSH keys.
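
The key-generation step mentioned above can be sketched in Python by wrapping the standard `ssh-keygen` tool. This is a minimal sketch, not the procedure from any of the guides quoted here: the RSA key type, the 4096-bit size, the empty passphrase, and the function names are all assumptions made for illustration.

```python
import subprocess
from pathlib import Path


def build_keygen_command(key_path, comment=""):
    """Return the ssh-keygen argument list for an RSA key pair (assumed settings)."""
    return [
        "ssh-keygen",
        "-t", "rsa",           # key type (assumption: RSA)
        "-b", "4096",          # key size in bits (assumption)
        "-f", str(key_path),   # private key file; the public key gets a .pub suffix
        "-N", "",              # empty passphrase, as used for password-less ssh
        "-C", comment,         # free-form comment stored with the public key
    ]


def generate_key(key_path, comment=""):
    """Create the parent directory if needed, then run ssh-keygen."""
    key_path = Path(key_path)
    key_path.parent.mkdir(mode=0o700, parents=True, exist_ok=True)
    subprocess.run(build_keygen_command(key_path, comment), check=True)
```

For example, `generate_key(Path.home() / ".ssh" / "id_rsa_sandbox", "sandbox-key")` would write a key pair into the .ssh folder; the file name `id_rsa_sandbox` is hypothetical.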

Late last year, the news of the merger between Hortonworks and Cloudera shook the industry and gave birth to the new Cloudera – the combined company with a focus on being an Enterprise Data Cloud leader and a product offering that spans from edge to AI. One of the most promising technology areas in this merger that already had a high growth potential and is poised for even more growth is the Data-in-Motion platform called Hortonworks DataFlow (HDF). It is a key capability that will address the needs of our combined customer base in areas of real-time streaming architectures and Internet-of-Things (IoT). HDF is already a highly successful product offering with hundreds of customers like ClearSense, Trimble, Hilton etc.

So, what happens to HDF in the new Cloudera? What should customers expect? The good news is that it will still remain strategic to our company as well as to our customers. So, HDF is now reborn as Cloudera DataFlow (CDF).

What is Cloudera DataFlow?

Cloudera DataFlow (CDF) is a scalable, real-time streaming data platform that collects, curates, and analyzes data so customers gain key insights for immediate actionable intelligence. It meets the challenges faced with data-in-motion, such as real-time stream processing, data provenance, and data ingestion from IoT devices and other streaming sources. Built on 100% open source technology, CDF helps you deliver a better customer experience, boost your operational efficiency and stay ahead of the competition across all your strategic digital initiatives.

With the rise of streaming architectures and digital transformation initiatives everywhere, enterprises are struggling to find comprehensive tools for data management to handle high volumes of high-velocity streaming data. CDF, as an end-to-end streaming data platform, emerges as a clear solution for managing data from the edge all the way to the enterprise. It can handle edge data collection, data ingestion, transformation, curation, data enrichment, content routing, processing multiple streams at IoT scale and analyzing those in real-time to gain actionable intelligence. CDF can do this within a common framework that offers unified security, governance and management.

The key aspects of the CDF platform are –

- Edge Data Management – Set up hundreds of MiNiFi agents in or near edge devices to enable edge data collection, content filtering, routing etc. This allows you to take on complex, distributed use cases such as connecting hundreds of retail stores across the country or getting data from thousands of utility sensors from your consumer edge. This is going to be a significant area of investment for us given our customer interest, the industry trends and the market potential.

- Flow Management – Adopt a no-code approach to create visual flows for building complex data ingestion / transformation with drag-and-drop ease. Powered by Apache NiFi and its 260+ pre-built processors, CDF enables you to take on extremely high-scale, high-volume and high-speed data ingestion use cases with simplicity and ease.

- Stream Processing – Manage and process multiple streams of real-time data using the most advanced distributed stream processing system – Apache Kafka. Process millions of real-time messages per second to feed into your data lake or for immediate streaming analytics.

- Streaming Analytics – Analyze millions of streams of data in real-time using advanced techniques such as aggregations, time-based windowing, content-filtering etc., to generate key insights and actionable intelligence for predictive and prescriptive analytics. CDF is the only streaming platform to offer a choice of 3 different streaming analytics solutions – Apache Storm, Kafka Streams and Apache Spark Streaming.

- Enterprise Services – Leverage a common set of enterprise services for unified security, governance and single sign-on across the entire Cloudera DataFlow platform. This makes the platform experience truly enriching when the same set of services make the interoperability between components seamless.

Why Cloudera DataFlow?

CDF addresses a wide range of use cases such as Customer 360, data movement between data centers (on-premises and cloud), data ingestion from real-time streaming sources, log data ingestion and processing, and streaming analytics. CDF also addresses a wide spectrum of IoT-specific use cases such as Predictive Maintenance, Asset Tracking, Patient Monitoring, Utility Monitoring, and Smart Cities. CDF is the only comprehensive streaming data platform in the market that is 100% open source and also offers a choice of three streaming analytics engines. CDF is the only platform in the market to offer out-of-the-box data provenance on streaming data. With an extremely strong community behind it, Apache NiFi powers CDF’s Flow Management capabilities with more than 260 pre-built processors for data source connectivity, ingestion, transformation and content routing.

To learn more about Cloudera DataFlow, attend our upcoming webinar on Feb 13th, 2019.

Dinesh Chandrasekhar (@AppInt4All) is a technology evangelist, a thought leader and a seasoned product marketer with more than 24 years of industry experience. He has an impressive track record of taking new integration/mobile/IoT/Big Data products to market with a clear GTM strategy of pre- and post-launch activities. Dinesh has extensive experience working on enterprise software as well as SaaS products delivering sophisticated solutions for customers with complex architectures. As a Lean Six Sigma Green Belt, he has been the champion for Digital Transformation at companies like Software AG, CA Technologies and IBM. Dinesh’s areas of expertise include IoT, Application/Data integration, BPM, Analytics, B2B, API management, Microservices, and Mobility. He is proficient in use cases across multiple industry verticals like retail, manufacturing, utilities, and healthcare. He is a prolific speaker, blogger, and a weekend coder. He currently works at Cloudera, managing their Data-in-Motion product line. He is fascinated by new technology trends including blockchain and deep learning. Dinesh holds an MBA degree from Santa Clara University and a Master’s degree in Computer Applications from the University of Madras.

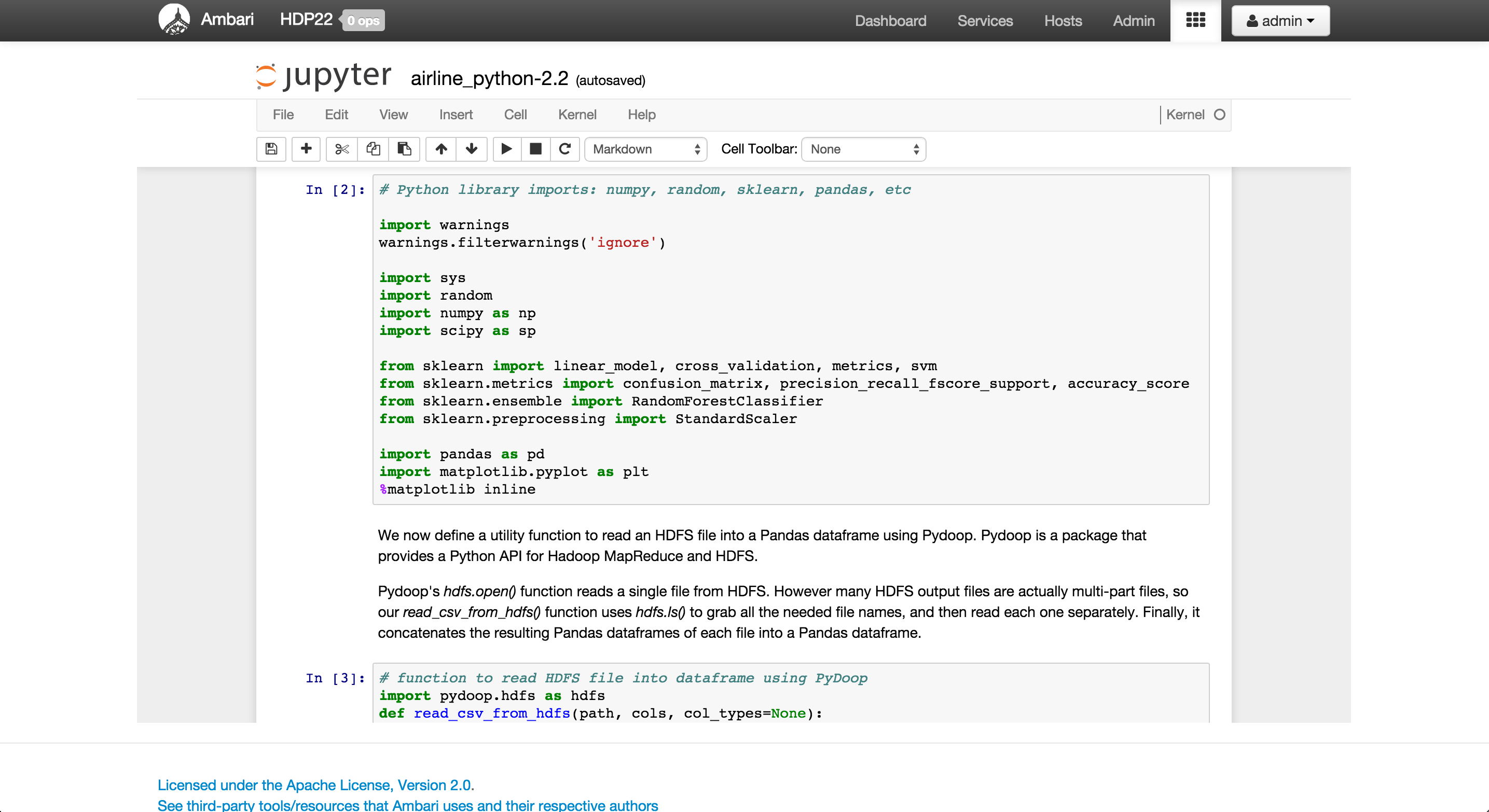

Installing a single-node Hadoop cluster is not a straightforward task. It involves a number of steps, from creating users and groups to enabling password-less ssh. Thanks to virtualization technology and Hortonworks' pre-configured OS images with Hadoop and a few of its ecosystem components, the task has been greatly simplified. Though this does not let a first-time Hadoop user learn about the system-level complexities of Hadoop, it simplifies administration and deployment. The user can now focus on data management and analysis.

Downloads

The 2.4GB image for the Hortonworks Hadoop sandbox can be downloaded from [here](http://hortonworks.com/products/hortonworks-sandbox/#install). I have chosen Oracle's VirtualBox as the virtualization technology. It can be downloaded from [here](https://www.virtualbox.org/wiki/Downloads).

Configuration

I installed VirtualBox on my Windows 8 PC, which has 4GB of RAM. The documentation clearly states that if Ambari and/or HBase are to be enabled and used, a machine with at least 8GB of RAM is required.

When the sandbox image is imported into VirtualBox, the guest OS is allocated 2GB of RAM. The more RAM the guest OS is given, the faster it performs. Hortonworks recommends at least 4GB of RAM for the guest OS. The guest OS image is a 64-bit CentOS operating system.

Importing the image gave me an error: VT-x disabled in the BIOS. The error message suggests that virtualization support is nonexistent or disabled on my processor. The SecurAble [tool](https://www.grc.com/securable.htm) can be downloaded and run to check whether your processor supports virtualization.

If virtualization is supported, the PC's BIOS has an option to turn it on. Enable that option and save the BIOS settings.
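
On a Linux host, a similar check can be sketched without a separate tool by looking for the `vmx` (Intel VT-x) or `svm` (AMD-V) flag in `/proc/cpuinfo`. This is an assumption-laden sketch: it does not apply to the Windows 8 host used in these notes, the function name is made up for illustration, and the flag reflects what the CPU advertises, so it may not tell you whether the BIOS option is actually switched on.

```python
def virtualization_flags(cpuinfo_text):
    """Return the hardware virtualization flags found in /proc/cpuinfo text."""
    found = set()
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            # vmx = Intel VT-x, svm = AMD-V
            found |= {"vmx", "svm"} & set(value.split())
    return found
```

Calling `virtualization_flags(open("/proc/cpuinfo").read())` returns an empty set when neither flag is present.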

Start

When the sandbox boots up, many services are started, including, but not limited to, the Hadoop NameNodes, Hive, Pig, Oozie, and supporting database servers.

The sandbox also supports an advanced UI for Hadoop called HUE. HUE can be accessed on the host machine browser at address http://127.0.0.1:8888.

HUE allows a user to run Hive or Pig scripts, import and export data, and perform user administration for Hadoop, among other tasks.
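
Because the services take a while to start, it can help to confirm that something is listening on the HUE address before opening the browser. The following is a minimal sketch that only tests whether a TCP connection to 127.0.0.1:8888 succeeds; the function name and the timeout value are assumptions, and an open port does not by itself prove that HUE has finished starting.

```python
import socket


def is_port_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False
```

`is_port_open("127.0.0.1", 8888)` would then report whether the forwarded HUE port accepts connections on the host machine.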

A user can log in to the sandbox from the VM console by pressing Alt + F5, or over ssh.

The username and password for login are root and hadoop.
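
The ssh login can be sketched as a small Python wrapper around the `ssh` client. The `root` user comes from the notes above; the host `127.0.0.1` and port `2222` are assumptions about how VirtualBox forwards the guest's ssh port and should be checked against the VM's port-forwarding settings. The function names are hypothetical.

```python
import subprocess


def build_ssh_command(user="root", host="127.0.0.1", port=2222):
    """Return the ssh argument list for logging in to the sandbox.

    host and port are assumptions about the VM's port forwarding.
    """
    return ["ssh", "-p", str(port), f"{user}@{host}"]


def open_ssh_session(user="root", host="127.0.0.1", port=2222):
    """Start an interactive ssh session; ssh itself prompts for the password."""
    return subprocess.call(build_ssh_command(user, host, port))
```

Calling `open_ssh_session()` hands control to the interactive `ssh` client, which asks for the password on the terminal.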

Once logged in, the running processes can be examined with the ps command. The Hadoop conf directory is at /etc/hadoop/conf. Examining hdfs-site.xml in the conf directory shows where HDFS stores its data on the sandbox's disk: /hadoop/hdfs/data.
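
Reading a setting out of hdfs-site.xml can be sketched with Python's standard XML parser, since Hadoop site files are a flat list of `<property>` elements with `<name>` and `<value>` children. The function name is made up for illustration, and the property name to look up is an assumption: the data directory is typically `dfs.datanode.data.dir` on Hadoop 2.x and `dfs.data.dir` on older releases, so check which one the sandbox's file uses.

```python
import xml.etree.ElementTree as ET


def read_hadoop_property(xml_text, name):
    """Return the value of a named property from Hadoop *-site.xml text, or None."""
    root = ET.fromstring(xml_text)
    for prop in root.iter("property"):
        if prop.findtext("name", default="").strip() == name:
            value = prop.findtext("value")
            return value.strip() if value is not None else None
    return None
```

On the sandbox, this could be called as `read_hadoop_property(open("/etc/hadoop/conf/hdfs-site.xml").read(), "dfs.datanode.data.dir")`, with the property name adjusted as noted above.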

I will dissect the sandbox a little more in the next post.